The Magic (and Limits) of Human Visual Perception

We'll look at the often limited ways we perceive the world and how those limitations allow experts and algorithms to bypass our cognitive filters.

This article is derived from the original article "The Eyes are the Prize (Advertising's Holy Grail)" by Avi Bar-Zeev, published on the website Motherboard.com in 2019 and then updated and expanded on Medium.com.

How Does Human Vision Work (and Not Work)?

Starting at the most basic level, our retinas sit in the back of our eyes, turning the light they receive into signals our brain can interpret, so that we can “see.”

Our retinas have specialized areas, evolved to help us survive.

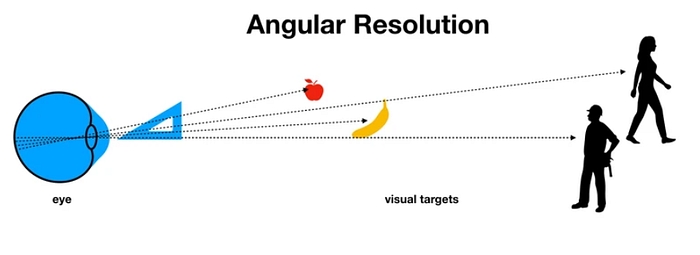

The highest-resolution area of each retina, called the “fovea” (plural “foveae”), covers a very small circle, only about five degrees across.

Most of your visual field is called “peripheral,” extending to a combined horizontal span of about 220 degrees across both eyes. The peripheral areas are good at sensing motion and important survival patterns like horizons, lurking shadows, and other pairs of eyes looking back at us. But in the periphery, surprisingly, we see very little color or fine detail.

All of the words on this page hopefully look sharp. But your brain is deceiving you. You’re really only able to focus clearly on a word or two at any given time.
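The few degrees of sharp central vision translate into a surprisingly small patch of a page or screen. The standard visual-angle relation, width = 2 · d · tan(θ / 2), converts an angle θ into a physical width at viewing distance d. This is a minimal sketch: the function names and the 50 cm reading distance are illustrative assumptions, not values from the original article.

```python
import math


def angle_to_width(angle_deg: float, distance_mm: float) -> float:
    """Width (mm) spanned by a visual angle at a given viewing distance."""
    # Visual-angle relation: width = 2 * d * tan(theta / 2)
    return 2.0 * distance_mm * math.tan(math.radians(angle_deg) / 2.0)


def width_to_angle(width_mm: float, distance_mm: float) -> float:
    """Inverse relation: visual angle (degrees) spanned by an object of a given width."""
    return math.degrees(2.0 * math.atan(width_mm / (2.0 * distance_mm)))


# Assumed reading distance of 50 cm (illustrative, not from the article).
READING_DISTANCE_MM = 500.0

for angle in (1.0, 2.0, 5.0):
    width = angle_to_width(angle, READING_DISTANCE_MM)
    print(f"{angle:.0f} deg at 50 cm spans about {width:.1f} mm")
```

At that distance, 1, 2, and 5 degrees span roughly 8.7 mm, 17.5 mm, and 4.4 cm, which is roughly consistent with seeing only a word or two of ordinary text sharply at any moment.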

To maintain the illusion of high detail everywhere, our eyes dart around, scanning the scene like a flashlight in the dark, building up a picture of the world over time. The peripheral detail is mostly remembered and imagined.

Those rapidly darting eye motions are called “saccades.” Their patterns can tell a computer a lot about what we see, how we think, and what we know. They show what we’re aware of and focusing on at any given time. In addition to saccades, your eyes also make “smooth pursuit” movements, for example while tracking a moving car or baseball. Your eyes make other small corrections quite often, without you noticing.
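A common, simple way to tell these movements apart in recorded gaze data is to threshold how fast the gaze angle changes, an approach usually called velocity-threshold identification (I-VT). The sketch below extends it with a middle band for smooth pursuit. The thresholds, the `GazeSample` type, and the `classify` function are illustrative assumptions, not values or names from the original article; real classifiers also filter noise and enforce minimum durations.

```python
import math
from dataclasses import dataclass
from typing import List

# Illustrative thresholds in degrees per second (assumptions, not from the
# article); real eye trackers tune these per device and sampling rate.
FIXATION_MAX_DEG_S = 5.0
PURSUIT_MAX_DEG_S = 40.0


@dataclass
class GazeSample:
    t: float      # timestamp in seconds
    x_deg: float  # horizontal gaze angle in degrees
    y_deg: float  # vertical gaze angle in degrees


def classify(samples: List[GazeSample]) -> List[str]:
    """Label each interval between consecutive samples by angular velocity."""
    labels = []
    for prev, cur in zip(samples, samples[1:]):
        dt = cur.t - prev.t
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        # Treat the small x/y gaze angles as a flat plane (small-angle approximation).
        velocity = math.hypot(cur.x_deg - prev.x_deg, cur.y_deg - prev.y_deg) / dt
        if velocity < FIXATION_MAX_DEG_S:
            labels.append("fixation")
        elif velocity < PURSUIT_MAX_DEG_S:
            labels.append("pursuit")
        else:
            labels.append("saccade")
    return labels


# Hypothetical 100 Hz recording: a steady gaze, a slow drift, then a fast jump.
samples = [
    GazeSample(0.00, 0.00, 0.0),
    GazeSample(0.01, 0.01, 0.0),  # about 1 deg/s
    GazeSample(0.02, 0.21, 0.0),  # about 20 deg/s
    GazeSample(0.03, 3.21, 0.0),  # about 300 deg/s
]
print(classify(samples))  # ['fixation', 'pursuit', 'saccade']
```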

Computers can be trained to track and understand these movements. The better systems can distinguish between two or more visual targets that are only half a degree apart, measured as the angle between imaginary lines drawn from the eye to each target. But even lower-precision eye tracking (1–2 degrees) can help infer a user’s intent and/or health.
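The half-degree figure is a visual angle: the angle between the lines of sight from the eye to each of two targets. The sketch below computes it from 3D positions and applies a hypothetical rule of thumb (targets count as separable only if they are farther apart than the tracker's angular error). The function names, the millimeter coordinates, and the rule itself are illustrative assumptions, not from the original article.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def angular_separation_deg(eye: Vec3, a: Vec3, b: Vec3) -> float:
    """Angle (degrees) between the lines of sight from `eye` to targets `a` and `b`."""
    u = tuple(p - e for p, e in zip(a, eye))
    v = tuple(p - e for p, e in zip(b, eye))
    cross = (
        u[1] * v[2] - u[2] * v[1],
        u[2] * v[0] - u[0] * v[2],
        u[0] * v[1] - u[1] * v[0],
    )
    dot = sum(p * q for p, q in zip(u, v))
    # atan2(|u x v|, u . v) stays accurate for very small angles, unlike acos.
    return math.degrees(math.atan2(math.hypot(*cross), dot))


def distinguishable(separation_deg: float, tracker_error_deg: float) -> bool:
    """Hypothetical rule of thumb: separable if farther apart than the tracker error."""
    return separation_deg > tracker_error_deg


# Two targets 6 mm apart, 600 mm in front of an eye at the origin.
sep = angular_separation_deg((0.0, 0.0, 0.0), (-3.0, 0.0, 600.0), (3.0, 0.0, 600.0))
print(f"separation: {sep:.2f} deg")
print("0.5 deg tracker:", distinguishable(sep, 0.5))
print("1.0 deg tracker:", distinguishable(sep, 1.0))
```

The targets are about 0.57 degrees apart, so under this rule a half-degree tracker could tell them apart while a 1-degree tracker could not.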

Seeing Everything Live vs. Recalling from Short-Term Memory

If you temporarily suppress your own saccades, as in the above video experiment, you can begin to understand how limited our peripheral vision really is. By staring at the central dot, you keep your brain from maintaining the illusion of “seeing” everywhere at once. Your perception and memory of the periphery start to drift quickly, especially when the scene changes to two new faces, giving even the “world’s sexiest” people a temporarily grotesque appearance. No, the video didn't suddenly get ugly; your brain simply isn't keeping up with the information on screen (until you foveate the new faces).

It turns out that your gaze can also be externally manipulated to a surprising degree.

Since saccades help us take in new details, any motion in our local environment may draw our gaze. In the wild, detecting such motion could be vital to survival.

Illusionists and magicians use this feature to distract us from their sleight of hand, moving their hands dramatically to draw our gaze. Even more subtly, our eyes will naturally follow the magician’s own gaze, as if to see what they see. This is a very common social behavior called “joint attention.”

Luring our gaze is also useful for storytelling. Movie directors can’t aim and focus our eyes the way they aim and focus a camera on set, so they count on us seeing what they intend us to see in every shot.

Visual and UI designers similarly build websites and apps that try to lead our gaze constructively, using placement, whitespace, and font sizes so that we notice the most important UI elements first and understand what actions to take more intuitively than if we had to scan everywhere and build a mental map.

Remarkably, we are essentially blind while our eyes are in saccadic motion. This blindness is useful, as the world would otherwise appear blurry and confusing quite often. These natural saccades typically happen several times a second. And we almost never notice.

During these frequent, brief moments of blindness, researchers can opportunistically change the world around us.

Like magicians, they can swap out one whole object for another, remove something, or rotate an entire (virtual) world around us. If they simply zapped an object, you’d likely notice. But if they do it while you blink or are in a saccade, you most likely won’t.
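The blink- and saccade-contingent swap described above can be sketched as a per-frame check: queue the change, then apply it only on a frame where the tracker reports closed eyes or a gaze velocity high enough to indicate a saccade. This is a minimal sketch assuming an eye tracker that reports angular velocity and a blink flag every frame; `EyeState`, `Scene`, `update`, and the 100 deg/s threshold are hypothetical, not from the original article.

```python
from dataclasses import dataclass
from typing import Optional

SACCADE_MIN_DEG_S = 100.0  # assumed saccade threshold (illustrative)


@dataclass
class EyeState:
    velocity_deg_s: float  # current gaze angular velocity
    eyes_closed: bool      # True during a blink


@dataclass
class Scene:
    visible_object: str
    pending_swap: Optional[str] = None  # change waiting for a "blind" moment


def update(scene: Scene, eye: EyeState) -> bool:
    """Apply a queued change only while vision is suppressed; return True if applied."""
    if scene.pending_swap is None:
        return False
    suppressed = eye.eyes_closed or eye.velocity_deg_s >= SACCADE_MIN_DEG_S
    if suppressed:
        scene.visible_object = scene.pending_swap
        scene.pending_swap = None
        return True
    return False


scene = Scene("red mug", pending_swap="blue mug")
frames = [EyeState(2.0, False), EyeState(350.0, False), EyeState(1.0, False)]
print([update(scene, f) for f in frames], scene.visible_object)
# [False, True, False] blue mug
```

In practice a saccade lasts only tens of milliseconds, so the tracker and display pipeline must be fast enough for the change to appear before the eye lands.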

That last bit may be useful for future Virtual Reality systems, where you are typically wearing a head-mounted display (HMD) in an office or living room. If you tried to walk in a straight line in VR, you’d likely walk into a table or wall in real life. “Guardian” systems try to prevent this by visually warning you to stop.

With “redirected walking,” you’d believe that you’re walking straight while you are actually being lured along a safer, circular path through your room by a series of small rotations and visual distractors inside the headset.
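One well-known way to implement redirected walking, usually called a curvature gain, rotates the virtual world by a small angle for every step, so a user who keeps walking “straight” in VR physically traces a circle. The article doesn't say which technique a given system uses, so treat this as an illustration of one option; `redirected_path` and the 2 m radius are hypothetical values chosen to keep the numbers readable.

```python
import math
from typing import List, Tuple


def redirected_path(radius_m: float, step_m: float, steps: int) -> List[Tuple[float, float]]:
    """Physical (x, y) positions of a user who walks 'straight' in VR while the
    virtual world is rotated by step_m / radius_m radians per step."""
    x, y, heading = 0.0, 0.0, 0.0
    path = [(x, y)]
    turn_per_step = step_m / radius_m  # injected rotation per step, in radians
    for _ in range(steps):
        # The user walks straight ahead relative to the virtual world...
        x += step_m * math.cos(heading)
        y += step_m * math.sin(heading)
        # ...but to stay aligned with the rotated world, their real heading
        # unknowingly turns by the same small angle.
        heading += turn_per_step
        path.append((x, y))
    return path


radius = 2.0
steps = 100
path = redirected_path(radius, 2 * math.pi * radius / steps, steps)
farthest = max(math.hypot(px, py) for px, py in path)
print(f"end distance from start: {math.hypot(*path[-1]):.6f} m")
print(f"farthest from start: {farthest:.2f} m")
```

With 100 steps covering one full circumference, the path closes back on its starting point and never strays more than about 4 m (the circle's diameter) from it. How much per-step rotation goes unnoticed is an empirical question, which is one reason to hide rotations behind saccades and distractors.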

In this last video example, the experimenter/host literally swaps himself out for someone else, mid-conversation, on a public street. With suitable distractions, the subject of the experiment doesn’t notice.

The bottom line is that we see much less than we think we do.
